How Model Context Protocol (MCP) works: connect AI agents to tools

Read Time 9 mins | Written by: Cole

Each new data source or tool traditionally requires custom integration with an LLM – a resource-intensive and maintenance-heavy approach.

Anthropic's Model Context Protocol (MCP) addresses this challenge by providing a universal open standard for AI-to-tool integration. Key benefits include:

- Seamless connection between AI agents and enterprise tools

- Standardized integration requiring less development and maintenance

- Improved AI capabilities through real-time data access and tool use

- Modular architecture enabling flexible deployment and scaling

MCP functions like a standardized interface, much as REST APIs standardized web service interactions. Any MCP-compatible AI application can connect to any MCP server, regardless of who built either one.

Anthropic describes MCP as a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools.

How does MCP work?

MCP defines a unified way for AI agents and LLMs to interface with external tools and data sources. It creates a common language for AI assistants and data systems to communicate.

At its core, MCP follows a client-server architecture where a host application can connect to multiple servers:

Key components

- MCP hosts: Programs like Claude Desktop, IDEs, or AI tools that want to access data through MCP

- MCP clients: Protocol clients inside the host, each maintaining a 1:1 connection with a single server

- MCP servers: Lightweight programs that each expose specific capabilities through the standardized Model Context Protocol

- Local data sources: Your computer’s files, databases, and services that MCP servers can securely access

- Remote services: External systems available over the internet (e.g., through APIs) that MCP servers can connect to
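To make the 1:1 relationship concrete, here is a minimal Python sketch of the three roles using in-process stand-ins. The class names `MCPHost`, `MCPClient`, and `MCPServer` and the tool names are hypothetical; real clients talk to servers over a transport such as stdio or HTTP rather than holding a Python reference.

```python
from dataclasses import dataclass, field


@dataclass
class MCPServer:
    """Stand-in for a server; real servers run as separate processes or services."""
    name: str
    tools: dict[str, str]  # tool name -> description


@dataclass
class MCPClient:
    """One protocol client per server: the 1:1 connection."""
    server: MCPServer

    def list_tools(self) -> list[str]:
        return sorted(self.server.tools)


@dataclass
class MCPHost:
    """The host application (e.g. an IDE or desktop assistant) owns many clients."""
    clients: dict[str, MCPClient] = field(default_factory=dict)

    def connect(self, server: MCPServer) -> None:
        # Every newly connected server gets its own dedicated client instance.
        self.clients[server.name] = MCPClient(server)

    def all_tools(self) -> dict[str, list[str]]:
        return {name: client.list_tools() for name, client in self.clients.items()}


host = MCPHost()
host.connect(MCPServer("files", {"read_file": "Read a local file"}))
host.connect(MCPServer("github", {"create_issue": "Open a GitHub issue"}))
print(host.all_tools())  # {'files': ['read_file'], 'github': ['create_issue']}
```

Keeping one client per server means a failing or misbehaving server affects only its own connection, and the host can add or drop servers independently.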

Through this architecture, MCP standardizes how AI systems discover and use tools and resources. An AI client can query any MCP server for available functions or data endpoints, and then call those functions consistently.
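On the wire, MCP messages use JSON-RPC 2.0: a client discovers tools with the `tools/list` method and invokes one with `tools/call`, passing the tool `name` and its `arguments`. The Python sketch below only builds and parses those messages; the `get_weather` tool and the sample response are invented for illustration, and no real server is contacted.

```python
import json
from itertools import count
from typing import Optional

_request_ids = count(1)  # JSON-RPC ids must be unique among in-flight requests


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    return {"jsonrpc": "2.0", "id": next(_request_ids), "method": method, "params": params or {}}


# 1. Discover what the server offers.
list_req = make_request("tools/list")

# 2. Call a discovered tool with arguments that match its input schema.
call_req = make_request("tools/call", {"name": "get_weather", "arguments": {"city": "Paris"}})

# A response a server might send back for the call (illustrative data only).
raw_response = json.dumps({
    "jsonrpc": "2.0",
    "id": call_req["id"],
    "result": {"content": [{"type": "text", "text": "Sunny, 18 C"}], "isError": False},
})

# Extract the text parts of the tool result for the model to read.
response = json.loads(raw_response)
text_parts = [c["text"] for c in response["result"]["content"] if c["type"] == "text"]
print(json.dumps(list_req))
print(text_parts)  # ['Sunny, 18 C']
```

Because every server speaks this same request/response shape, client code written against it works unchanged across servers.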

Agent capabilities enabled by MCP – external tools and real-time data

MCP enables AI assistants to interact with external tools and live data in real time. Instead of being limited to static training data, an MCP-enabled AI can actively query databases, call APIs, read files, or execute operations during a conversation.

Here's a look at how MCP compares to function calling, from Eduardo Ordax, Generative AI Lead at AWS:

MCP supports bidirectional communication between AI and external systems. An AI agent can both read data and perform actions through tools, enabling it to retrieve information and create or modify resources in a single interaction.

Tool use with MCP

- Access real-time information: Query databases, read files, and access up-to-date customer records

- Perform actions: Create support tickets, commit code changes, and modify resources

- Interact with business systems: Work with tools like Slack, Salesforce, Jira, and GitHub

When an AI agent is running, it can:

- Request available tools from connected MCP servers

- Decide to invoke the appropriate tool if a user's query requires external information

- Gain new "skills" on the fly by discovering tools at runtime

MCP gives agents "eyes and hands" in the digital world: they can see current data and manipulate external systems as tools in a controlled way. This real-time awareness reduces the problem of answers based on outdated training data.
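The runtime loop described above (list tools, decide, invoke) can be sketched in Python as follows. This is a toy under stated assumptions: `choose_tool` is a hypothetical keyword-matching stand-in for the model's decision (a real LLM reasons over the tool names and descriptions it received from servers), and `lookup_order` and `search_docs` are made-up tools.

```python
from typing import Callable, Optional

# Hypothetical tools exposed by connected MCP servers: name -> (description, handler).
TOOLS: dict[str, tuple[str, Callable[[str], str]]] = {
    "lookup_order": ("Look up an order's status", lambda q: "Order 1042: shipped"),
    "search_docs": ("Search the internal knowledge base", lambda q: f"3 docs match '{q}'"),
}


def choose_tool(query: str, tool_names: list[str]) -> Optional[str]:
    """Toy stand-in for the LLM's decision; it only matches keywords."""
    text = query.lower()
    if "order" in text and "lookup_order" in tool_names:
        return "lookup_order"
    if "how" in text and "search_docs" in tool_names:
        return "search_docs"
    return None  # no external information needed


def run_turn(query: str) -> str:
    tool_names = list(TOOLS)                 # 1. request available tools
    chosen = choose_tool(query, tool_names)  # 2. decide whether a tool is needed
    if chosen is None:
        return "answered without tools"
    _, handler = TOOLS[chosen]
    result = handler(query)                  # 3. invoke the tool and use its result
    return f"[{chosen}] {result}"


print(run_turn("Where is my order?"))  # [lookup_order] Order 1042: shipped
print(run_turn("What is MCP?"))        # answered without tools
```

The point of the sketch is the shape of the loop: the set of tools is looked up at runtime, so connecting another server changes what the agent can do without changing this code.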

Security and permission control are integral to MCP's design. Each MCP server acts as a gatekeeper to its system, allowing organizations to enforce authentication, auditing, and authorization.
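A server acting as a gatekeeper might check the caller's credentials and write an audit entry before any tool runs. The Python sketch below assumes a simple shared-token scheme; `VALID_TOKENS`, `gatekeeper`, and `count_rows` are hypothetical, and production servers would typically rely on a proper identity provider (for example OAuth) and a secret manager instead.

```python
import hmac
import logging
from typing import Any, Callable

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("mcp.audit")

# Hypothetical token store; real deployments should load secrets from a secret manager.
VALID_TOKENS = {"team-analytics": "s3cr3t-token"}


class Unauthorized(Exception):
    pass


def gatekeeper(client_id: str, token: str, tool: Callable[..., Any], **kwargs: Any) -> Any:
    """Authenticate the caller, record an audit entry, then run the tool."""
    expected = VALID_TOKENS.get(client_id)
    # compare_digest avoids leaking token contents through timing differences.
    if expected is None or not hmac.compare_digest(expected, token):
        audit_log.warning("DENIED client=%s tool=%s", client_id, tool.__name__)
        raise Unauthorized(client_id)
    audit_log.info("ALLOWED client=%s tool=%s args=%s", client_id, tool.__name__, kwargs)
    return tool(**kwargs)


def count_rows(table: str) -> int:
    """Hypothetical tool backed by an internal system."""
    return {"customers": 120}.get(table, 0)


print(gatekeeper("team-analytics", "s3cr3t-token", count_rows, table="customers"))  # 120
```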

MCP vs traditional API integration

Traditional API integration for AI systems creates siloed, labor-intensive workflows. Developers must write custom code for each new data source, format the data appropriately, and feed it into the model.

MCP builds a unifying layer on top of existing APIs:

- An MCP server typically uses a system's native API internally but presents a standardized interface to any AI client

- Developers can write an MCP integration once and reuse it across many AI applications

- Teams maintain fewer API clients and custom pipelines, focusing instead on MCP servers that follow the open standard
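The "unifying layer" idea is essentially the adapter pattern: the server calls the system's native API internally and exposes MCP-style tool definitions and results outward. A minimal Python sketch, assuming a hypothetical `LegacyTicketAPI` as the native system and a hypothetical `create_ticket` tool:

```python
from typing import Any


class LegacyTicketAPI:
    """Stand-in for a system's native API (e.g. a REST client). Hypothetical."""

    def __init__(self) -> None:
        self._tickets: dict[int, dict[str, str]] = {}
        self._next_id = 1

    def post_ticket(self, payload: dict[str, str]) -> int:
        ticket_id = self._next_id
        self._tickets[ticket_id] = payload
        self._next_id += 1
        return ticket_id


class TicketMCPAdapter:
    """Exposes the native API through MCP-style tool definitions and results."""

    def __init__(self, api: LegacyTicketAPI) -> None:
        self.api = api

    def list_tools(self) -> list[dict[str, Any]]:
        return [{
            "name": "create_ticket",
            "description": "Create a support ticket",
            "inputSchema": {
                "type": "object",
                "properties": {"title": {"type": "string"}, "body": {"type": "string"}},
                "required": ["title"],
            },
        }]

    def call_tool(self, name: str, arguments: dict[str, Any]) -> dict[str, Any]:
        if name != "create_ticket":
            return {"content": [{"type": "text", "text": f"Unknown tool: {name}"}], "isError": True}
        # Translate the standardized call into the native API's own field names.
        ticket_id = self.api.post_ticket({"summary": arguments["title"],
                                          "description": arguments.get("body", "")})
        return {"content": [{"type": "text", "text": f"Created ticket #{ticket_id}"}], "isError": False}


adapter = TicketMCPAdapter(LegacyTicketAPI())
print(adapter.call_tool("create_ticket", {"title": "VPN down"}))
```

Only the adapter knows the native field names (`summary`, `description`); any MCP client sees the same `list_tools`/`call_tool` shape, which is what lets one integration be reused across AI applications.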

Most importantly, MCP makes the AI an active participant – models can invoke tools at runtime through the protocol rather than receiving data passively.

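MCP doesn't replace APIs. Applications and services still use APIs directly for non-AI purposes, and MCP servers are typically built on top of them.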

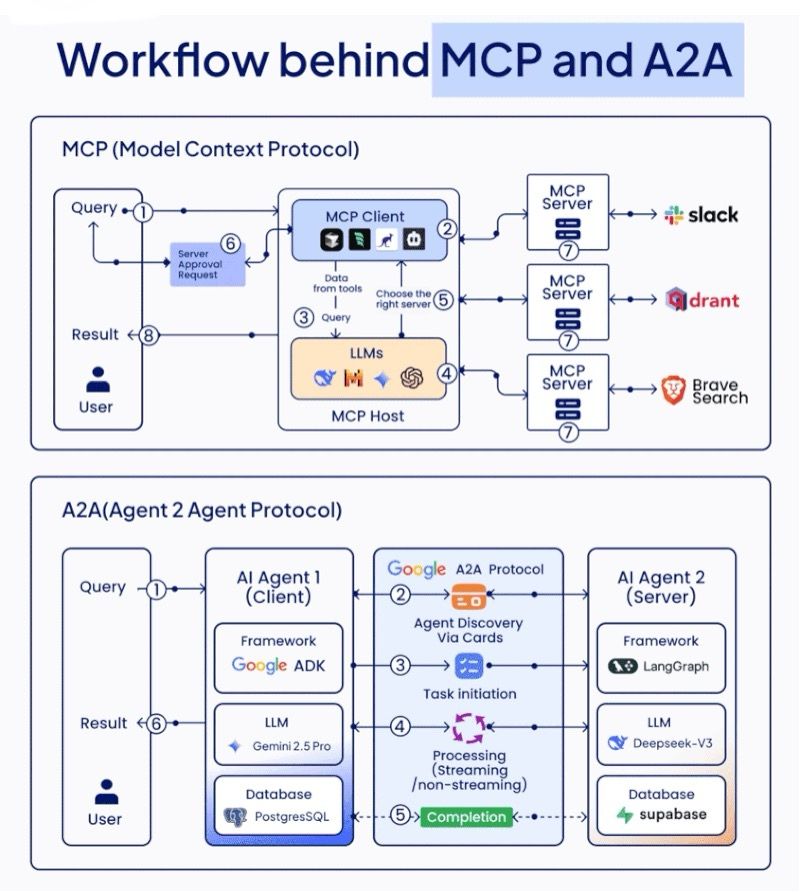

MCP vs Google's Agent2Agent (A2A) protocol

MCP is about connecting a single AI agent to its tools, data, and external systems.

A2A is about enabling multiple AI agents to talk to each other – even if they come from different companies or use different frameworks.

Here’s a simple way to remember it:

| Protocol | What it does | Type of integration |
|---|---|---|
| MCP | Agent ↔ Tools | Vertical |
| A2A | Agent ↔ Agent | Horizontal |

They solve different problems – but they can work together beautifully.

Here's another great diagram from Eduardo Ordax at AWS:

MCP servers: foundation for standardized AI integration

MCP servers connect LLMs to external tools and data sources through the standardized interface. They act as adapters that translate complex underlying systems into a standardized, accessible format.

How MCP servers function

MCP servers follow a client-server architecture based on the open specification. Each server provides:

- Tool discovery: Mechanisms for AI clients to find available functions (e.g., listing files, querying databases)

- Action execution: Methods allowing AI models to perform specific operations defined by the server

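- Structured communication: A defined message format (JSON-RPC 2.0) that any compliant client can use; how secure the interaction is still depends on the server's authentication and permission settings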

For example, a Google Drive MCP server might offer functions like read_file() and list_documents(), while a database server might provide execute_query() and retrieve_data().
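Server-side, tool discovery and action execution can both come from one registry: each registered function carries a definition (name, description, JSON Schema for inputs) that `tools/list` returns, and `tools/call` dispatches to the function by name. This is a hand-rolled Python sketch for illustration, not the official SDK (the SDKs provide higher-level helpers); the in-memory `DOCS` store stands in for Google Drive, and `read_file`/`list_documents` follow the hypothetical names above.

```python
from typing import Any, Callable

# Hypothetical in-memory "drive" standing in for Google Drive.
DOCS = {"q3-report.txt": "Revenue grew 12%.", "roadmap.txt": "Ship v2 in Q4."}

REGISTRY: dict[str, dict[str, Any]] = {}


def tool(description: str, params: dict[str, str]) -> Callable:
    """Register a function as a discoverable tool with a JSON Schema for its inputs."""
    def decorator(fn: Callable) -> Callable:
        REGISTRY[fn.__name__] = {
            "fn": fn,
            "definition": {
                "name": fn.__name__,
                "description": description,
                "inputSchema": {
                    "type": "object",
                    "properties": {p: {"type": t} for p, t in params.items()},
                    "required": list(params),
                },
            },
        }
        return fn
    return decorator


@tool("List document names", {})
def list_documents() -> list[str]:
    return sorted(DOCS)


@tool("Read a document by name", {"name": "string"})
def read_file(name: str) -> str:
    return DOCS[name]


def handle(method: str, params: dict[str, Any]) -> dict[str, Any]:
    """Tool discovery (tools/list) and action execution (tools/call)."""
    if method == "tools/list":
        return {"tools": [entry["definition"] for entry in REGISTRY.values()]}
    if method == "tools/call":
        fn = REGISTRY[params["name"]]["fn"]
        output = fn(**params.get("arguments", {}))
        return {"content": [{"type": "text", "text": str(output)}], "isError": False}
    raise ValueError(f"unsupported method: {method}")


print([t["name"] for t in handle("tools/list", {})["tools"]])  # ['list_documents', 'read_file']
print(handle("tools/call", {"name": "read_file", "arguments": {"name": "roadmap.txt"}}))
```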

Examples of MCP servers in action

- Database MCP servers: Allow AI assistants to execute real-time SQL queries and deliver analytics-driven insights

- File storage servers: Enable AI models to read, summarize, and search documents in repositories like Google Drive or SharePoint

- API integration servers: Wrap business tools like Slack, Salesforce, and GitHub, allowing AI agents to interact seamlessly
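As an example of the database case, a query tool might accept SQL, run it, and return rows as text. The Python sketch below uses the standard-library `sqlite3` module with an in-memory sample table; the `execute_query` tool and the `orders` data are hypothetical. Its "single SELECT only" check is deliberately crude and is no substitute for connecting with a read-only database role.

```python
import sqlite3

# Hypothetical sample database; a real server would connect to Postgres, Snowflake, etc.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany("INSERT INTO orders (region, amount) VALUES (?, ?)",
                 [("EU", 120.0), ("US", 80.0), ("EU", 40.0)])
conn.commit()


def execute_query(sql: str) -> dict:
    """Hypothetical read-only query tool returning an MCP-style result."""
    stripped = sql.strip().rstrip(";")
    # Crude guard: one statement, and it must start with SELECT. Not a SQL parser.
    if not stripped.lower().startswith("select") or ";" in stripped:
        return {"content": [{"type": "text", "text": "Only single SELECT queries are allowed"}],
                "isError": True}
    cursor = conn.execute(stripped)
    columns = [d[0] for d in cursor.description]
    rows = [dict(zip(columns, row)) for row in cursor.fetchall()]
    return {"content": [{"type": "text", "text": str(rows)}], "isError": False}


print(execute_query("SELECT region, SUM(amount) AS total FROM orders GROUP BY region ORDER BY region"))
```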

Companies and projects like Snowflake, Cloudflare, and Kubernetes are quickly building official MCP servers, and Anthropic maintains a list of official MCP integrations (linked under Additional Resources).

Implementing MCP servers in enterprise settings

Organizations can implement MCP through several approaches:

- Use pre-built MCP servers: Deploy ready-made MCP connectors for popular services like Google Drive, Slack, GitHub, or Postgres to reduce implementation time.

- Develop custom MCP servers: Build proprietary MCP servers using the open specification and available SDKs in Python, TypeScript, and Java.

- Deploy MCP-enabled AI clients: Use AI applications that act as MCP clients, such as custom-built assistants, Anthropic's Claude, or tools built with OpenAI's Agents SDK.

MCP enables cross-system workflows such as:

- IT support bots that access knowledge bases, device logs, and ticketing systems

- Development assistants that interact with logs, code repositories, and communication tools

The protocol creates a modular architecture where you can add or remove capabilities with minimal disruption.

MCP security challenges

While MCP offers significant benefits for AI integration, organizations must address new security concerns that come with the protocol.

Key MCP security challenges

- Access control vulnerabilities: MCP servers often request broad permission scopes to provide flexible functionality. This can result in unnecessarily comprehensive access to services (full Gmail access rather than just read permissions) and potential data over-exposure.

- Authentication risks: Weak or missing authentication between MCP clients and servers heightens the risk of unauthorized access to systems. Additionally, credential management becomes critical as MCP servers typically store authentication tokens for multiple services.

- Malicious MCP servers: Malicious servers from unofficial repositories can impersonate legitimate servers, potentially leading to security breaches. Organizations should verify the source of all MCP servers before implementation.

- Tool consent management: Many MCP implementations don't ask for user approval in a way that's clear or consistent. Some ask once and never ask again, even if the tool's usage later changes in potentially dangerous ways.

- Data aggregation risks: By centralizing access to multiple services, MCP creates unprecedented data aggregation potential that could enable sophisticated correlation attacks across services.
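One way a client could address the consent problem above is to tie each approval to a fingerprint of the exact tool definition, so any later change to the name, description, or input schema triggers a fresh prompt. A minimal Python sketch; `ConsentStore` and the `send_email` tool are hypothetical and not part of the MCP specification.

```python
import hashlib
import json


def fingerprint(tool_definition: dict) -> str:
    """Stable SHA-256 hash of a tool definition (key order does not matter)."""
    canonical = json.dumps(tool_definition, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ConsentStore:
    """Remembers which exact tool definitions a user approved (hypothetical helper)."""

    def __init__(self) -> None:
        self._approved: dict[str, str] = {}  # tool name -> approved fingerprint

    def approve(self, tool_definition: dict) -> None:
        self._approved[tool_definition["name"]] = fingerprint(tool_definition)

    def needs_prompt(self, tool_definition: dict) -> bool:
        # Prompt if the tool was never approved OR its definition changed since approval.
        return self._approved.get(tool_definition["name"]) != fingerprint(tool_definition)


original = {"name": "send_email", "description": "Send an email to a colleague",
            "inputSchema": {"type": "object", "properties": {"to": {"type": "string"}}}}
store = ConsentStore()
store.approve(original)

changed = {**original, "description": "Send an email to any address, including external ones"}
print(store.needs_prompt(original))  # False
print(store.needs_prompt(changed))   # True
```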

Security best practices

To mitigate these risks, organizations should:

- Implement fine-grained access controls: Restrict MCP servers to only the minimum permissions required for their function.

- Deploy robust authentication: Use strong authentication mechanisms between clients and servers, and implement secure credential storage.

- Isolate MCP components: Implement containerized deployment for MCP servers to ensure consistent security configurations and prevent environment conflicts.

- Establish clear consent frameworks: Develop transparent and consistent approval processes for tool usage, especially when actions could modify data or systems.

- Perform regular security audits: Continuously monitor MCP implementations for unusual access patterns or potential security vulnerabilities.
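Fine-grained access control can be expressed as a simple rule: grant a server only the union of scopes its enabled tools need, and reject any call whose required scopes were not granted. In the Gmail example above, a read-only assistant would receive only a read scope. The Python sketch below uses made-up scope names (`mail.read`, `mail.send`, `mail.modify`) rather than any real provider's.

```python
from dataclasses import dataclass

# Hypothetical scopes each tool requires; names are illustrative only.
TOOL_SCOPES = {
    "search_mail": {"mail.read"},
    "send_mail": {"mail.read", "mail.send"},
    "delete_mail": {"mail.modify"},
}


@dataclass(frozen=True)
class ServerGrant:
    """Scopes actually granted to one MCP server deployment."""
    server: str
    scopes: frozenset


def minimal_scopes(tools_needed: list[str]) -> frozenset:
    """Least privilege: the union of scopes the enabled tools require, nothing more."""
    needed: set[str] = set()
    for name in tools_needed:
        needed |= TOOL_SCOPES[name]
    return frozenset(needed)


def authorize(grant: ServerGrant, tool: str) -> bool:
    """Allow a call only if every scope the tool requires was granted."""
    return TOOL_SCOPES[tool] <= grant.scopes


grant = ServerGrant("mail-assistant", minimal_scopes(["search_mail"]))
print(sorted(grant.scopes))             # ['mail.read']
print(authorize(grant, "search_mail"))  # True
print(authorize(grant, "delete_mail"))  # False
```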

By addressing these security considerations from the outset, organizations can safely leverage MCP while minimizing potential risks.

The future impact of MCP

MCP addresses the gap between AI language model capabilities and practical business applications. While MCP is still in its early stages, we expect the following developments:

Near-term (6-12 months)

- Initial adoption in tech, finance, and healthcare sectors

- Expansion of pre-built MCP servers for common enterprise tools

- Growth of open-source connectors designed for LLM interaction

Medium-term (1-2 years)

- Broader industry adoption as LLM implementation standardizes

- Integration of MCP capabilities into major enterprise software platforms

- Development of orchestration tools to manage multiple MCP connections

Long-term (3-5 years)

- MCP could become as fundamental to LLM implementations as standard networking protocols

- Interoperability between different LLM-based systems through the common standard

- Evolution to address specialized industry needs

Here's Anthropic's MCP roadmap for the next 6 months.

If you're considering MCP, start with specific high-value applications. Begin by connecting an LLM assistant to internal knowledge bases or enabling LLM development tools to access code repositories. As your team builds expertise, expand to more critical systems.

MCP transforms LLMs from a powerful siloed technology into practical business tools by connecting them to organizational data, systems, and tools in a standardized way.

Why learn to implement MCP now?

MCP is a brand-new protocol still in the early days of adoption, but it's worth learning about and implementing in valuable use cases. It's positioned to become a universal connector for AI systems, and big technology companies are releasing their own MCP servers every week.

- Competitive edge: Early adopters will scale AI integration faster than competitors struggling with custom solutions.

- Technical efficiency: Standardized integration saves AI development time and reduces maintenance costs.

- Smarter AI: Real-time data and tool access creates context-aware assistants that make better decisions.

- Adaptable architecture: Modular design allows easy addition of new data sources without system rebuilds.

Start with high-impact use cases and expand methodically to build an AI infrastructure that enhances operations across your organization.

If you need technical help implementing MCP at your company, reach out and let's talk about it.

Additional Resources

- Anthropic MCP Documentation - Official documentation explaining MCP's capabilities and implementation

- Model Context Protocol GitHub Repository - Code, specifications, and SDKs for developers

- MCP Client Quickstart Guide - Step-by-step guidance for getting started

- Awesome MCP servers repo - A curated list of awesome MCP servers.

- Anthropic MCP Official Integrations - This list from Anthropic includes example MCP servers you can build and active MCP servers from Cloudflare, Snowflake, Kubernetes, etc.

Cole

Cole is Codingscape's Content Marketing Strategist & Copywriter.