Best LLMs for coding: developer favorites

Read Time 15 mins | Written by: Cole

[Last updated: Oct. 2025]

The best LLMs that developers use for coding stand out by combining deep understanding of programming languages with practical capabilities that enhance a developer's workflow. They solve complex problems and deliver code that can be used to build production applications faster – not just vibe code a prototype.

These models don't just generate syntactically correct code, but understand context, purpose, and best practices across various languages, frameworks, and libraries.

Many of these coding LLMs are available to use in developer tools like Cursor, Codex, and GitHub Copilot. Software developers tend to have a favorite LLM for code completion and use a few different models depending on the specific task.

Here are some of the LLMs developers use the most for coding.

Developers’ favorite LLMs for coding

Up until September 2025, Anthropic's Claude LLMs had the best reputation with software engineers. That reputation cracked for many when infrastructure problems and unannounced, extreme usage limits on expensive Claude Max plans led Claude Code users to abandon the platform for other coding LLMs.

Many developers still swear by Claude models for enterprise software engineering, but Anthropic damaged its reputation and lost developers to OpenAI Codex, GLM-4.6, Gemini 2.5 Pro, and open source models like Qwen 3.

There may be a new coding LLM leading the pack by the time you read this, but there are some consistent favorites software engineers rely on.

Claude 4 models

Claude Sonnet 4.5

Claude Sonnet 4.5 is a significant upgrade over Sonnet 4 that delivers stronger coding and reasoning while following instructions more precisely. It achieves a state-of-the-art score of 77.2% on SWE-bench Verified, balancing performance and efficiency for both internal and external use cases, with dramatic improvements in autonomous coding, extended thinking, and real-world software engineering tasks.

Key capabilities:

- State-of-the-art SWE-bench performance: Achieves 77.2% on SWE-bench Verified in standard mode (82.0% with parallel test-time compute), leading all models on this critical real-world software engineering benchmark

- Terminal mastery: Scores 50.0% on Terminal-Bench (61.3% with extended thinking enabled), becoming the first model to crack the 60% barrier on this challenging agentic coding benchmark

- Extended autonomous operation: Can work independently for 30+ hours on complex tasks while maintaining focus and context, enabling true long-running agent workflows

- Massive output capacity: Supports up to 64K output tokens, perfect for generating complete applications, comprehensive documentation, or complex refactors

- Context window: 200K tokens, allowing for deep understanding of large codebases

- Tool execution excellence: Reduced tool call error rates by 91% compared to Sonnet 4 in internal testing, with some partners reporting drops from 9% to 0% error rates

- Parallel tool execution: Can run multiple bash commands and tools simultaneously for maximum efficiency

- Enhanced instruction following: More precise adherence to coding specifications and requirements than previous models

- Extended thinking mode: Optional deeper reasoning for complex problems (available via API and web interface)

- Computer use leadership: Scores 61.4% on OSWorld benchmark, far ahead of competitors in real-world computer interaction tasks

- API access: Available via Anthropic API at $3 per million input tokens and $15 per million output tokens (same pricing as Sonnet 4)

Cursor CEO Michael Truell calls it "state-of-the-art for coding" with dramatic improvements in complex codebase understanding. GitHub reports that Sonnet 4.5 "soars in agentic tasks" and will power the new coding agent in GitHub Copilot. Devin saw planning performance increase by 18% and end-to-end eval scores jump by 12%. Multiple teams report that Sonnet 4.5 is the first model to meaningfully boost code quality during editing and debugging while maintaining full reliability.

Claude Opus 4.1

Claude Opus 4.1 excels at coding and complex problem-solving, powering frontier AI agents. While now surpassed by Sonnet 4.5 on engineering benchmarks like SWE-bench, it remains strong for sustained performance on long-running tasks requiring focused effort over several hours.

Key capabilities:

- Strong SWE-bench performance: Achieves 74.5% on SWE-bench Verified, behind only Sonnet 4.5 (77.2%) among Claude models

- Multi-file code refactoring excellence: GitHub reports particularly notable performance gains in handling complex multi-file refactors

- Precision debugging: Rakuten Group finds Opus 4.1 excels at pinpointing exact corrections within large codebases without making unnecessary adjustments or introducing bugs

- Significant improvement over Opus 4: Windsurf reports a one standard deviation improvement on their junior developer benchmark—roughly the same performance leap as the jump from Sonnet 3.7 to Sonnet 4

- Enhanced research and analysis: Improved in-depth research and data analysis skills, especially around detail tracking and agentic search

- Extended thinking with tool use (beta): Can use tools during extended thinking (up to 64K tokens) for deeper reasoning

- TAU-bench leadership: Strong performance on agentic tasks with extended reasoning

- Hybrid reasoning modes: Offers both near-instant responses and extended thinking for complex problems

- API access: Available via API with four Agent capabilities—code execution tool, MCP connector, Files API, and prompt caching for up to one hour

- Pricing: $15 per million input tokens, $75 per million output tokens (same as Opus 4)

Opus 4.1 delivers meaningful improvements in precision debugging and multi-file code operations, with teams praising its ability to make surgical corrections in large codebases without introducing bugs. However, for new projects, Anthropic recommends Claude Sonnet 4.5, offering superior performance (77.2% vs 74.5% on SWE-bench) at just 1/5th the cost, plus 30+ hour autonomous operation and extended output capacity.
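To make the "1/5th the cost" comparison concrete, here is a minimal sketch that prices a single request using the per-million-token rates quoted above. The workload size (50K tokens in, 4K tokens out) and the dictionary keys are illustrative assumptions, not official model identifiers.

```python
# Prices in USD per million tokens, as quoted in this article.
PRICES = {
    "claude-sonnet-4.5": {"input": 3.00, "output": 15.00},
    "claude-opus-4.1": {"input": 15.00, "output": 75.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the listed per-million-token prices."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical workload: 50K tokens of context in, 4K tokens of code out.
sonnet = request_cost("claude-sonnet-4.5", 50_000, 4_000)
opus = request_cost("claude-opus-4.1", 50_000, 4_000)
print(f"Sonnet 4.5: ${sonnet:.2f}  Opus 4.1: ${opus:.2f}  ratio: {sonnet / opus:.2f}")
```

Because both the input and output rates differ by the same factor of five, the ratio stays at 0.20 regardless of the mix of input and output tokens.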

OpenAI GPT-5 models

Released August 2025, GPT-5 is OpenAI's latest flagship model and represents a fundamental shift in their model architecture. It's a "unified" model that combines the reasoning capabilities of the o-series with the fast responses of previous GPT models, using a real-time router to intelligently decide when to engage deep thinking.

Key capabilities:

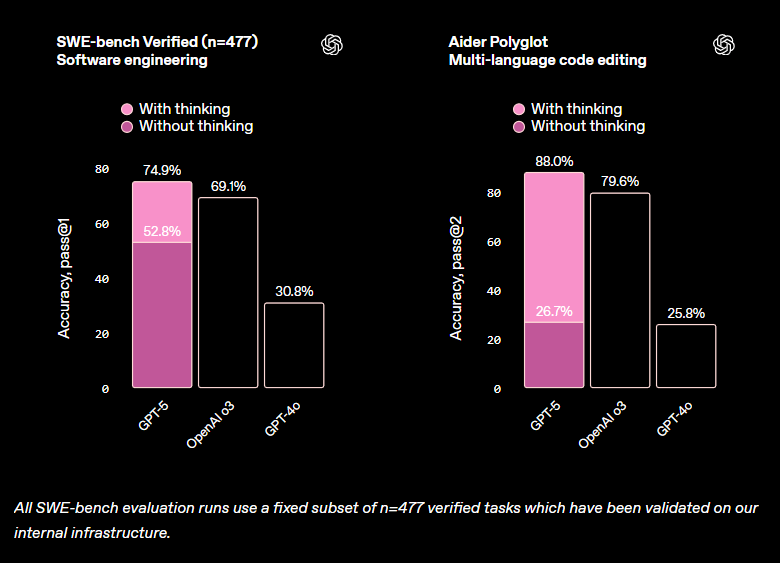

- State-of-the-art coding performance: Scores 74.9% on SWE-bench Verified, ahead of o3 (69.1%) and many competitors

- Exceptional code editing: Achieves 88% on Aider Polyglot, representing a one-third reduction in error rate compared to o3

- Massive context window: 400K tokens combined input/output (128K output tokens), enabling analysis of entire codebases

- Efficient reasoning: Uses 22% fewer output tokens and 45% fewer tool calls than o3 to achieve better results at high reasoning effort

- Front-end excellence: Early testers report GPT-5 beats o3 in front-end development 70% of the time, with superior aesthetic sensibility, typography, spacing, and default layouts

- Agentic tool intelligence: Achieves 96.7% on τ-bench telecom, demonstrating ability to chain together dozens of tool calls reliably

- Custom tools: Supports plaintext tool inputs instead of JSON, avoiding escaping issues for large code blocks

- Reduced hallucinations: 80% fewer factual errors compared to previous models, with only 4.8% hallucination rate when using thinking mode

- Competitive pricing: $1.25 per million input tokens and $10 per million output tokens (significantly cheaper than Claude models)

- Multiple variants: GPT-5 mini costs about 1/5th as much as GPT-5 while scoring only about 5 percentage points lower on benchmarks

GPT-5 particularly shines at complex front-end generation, debugging large repositories, and creating beautiful, responsive websites and apps with aesthetic sensibility in just one prompt. The model's built-in thinking architecture means it automatically decides when to engage deeper reasoning for complex problems while staying fast for simpler tasks.

Gemini 2.5 Pro w/ Deep Think

Google's Gemini 2.5 Pro has emerged as a formidable competitor, now topping the WebDev Arena coding leaderboard and showing particular strength in full-stack development. The Deep Think mode, rolled out in August 2025, adds enhanced reasoning capabilities for highly complex problems.

- WebDev Arena leadership: Currently leads this popular coding leaderboard, demonstrating real-world web development excellence

- Deep Think reasoning mode: Enhanced mode that considers multiple hypotheses before responding, using parallel thinking techniques to generate and evaluate many ideas simultaneously

- Top LiveCodeBench performance: Now scores 87.6% on LiveCodeBench v6 (competition-level coding), up from 80.4% in May 2025

- SWE-bench performance: Achieves 63.8% on SWE-bench Verified (as of latest public data)

- Exceptional multimodal understanding: 84.8% score on VideoMME for video understanding, plus 84.0% on MMMU for multimodal reasoning – particularly valuable for visual programming contexts

- Massive context window: 1 million tokens (2 million coming soon), enabling understanding of entire codebases and repositories

- Full-stack expertise: Balanced capabilities across frontend and backend development in multiple programming languages

- Thought summarization: Provides organized, clear explanations of reasoning process with headers and key details

- Configurable thinking budgets: Allows developers to control the balance between response quality and latency

- Code execution and tools: Deep Think works with code execution, Google Search, and other tools during inference

- Iterative development strength: Excels at tasks that require building something complex piece by piece

- Mathematical prowess: Achieves Bronze-level performance on 2025 International Mathematical Olympiad (IMO) benchmark

Gemini 2.5 Pro consistently gets compared to the latest LLMs from Anthropic and OpenAI.
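Context window size is one of the easier differences to reason about in practice. The sketch below estimates whether a codebase fits in each window quoted so far. The 4-characters-per-token ratio is a rough assumption (real tokenizers vary by model and language), and the function names are hypothetical.

```python
# Context windows in tokens, as quoted in this article.
CONTEXT_WINDOWS = {
    "Claude Sonnet 4.5": 200_000,
    "GPT-5": 400_000,
    "Gemini 2.5 Pro": 1_000_000,
}

# Assumption: roughly 4 characters per token for English text and code.
CHARS_PER_TOKEN = 4


def estimate_tokens(num_chars: int) -> int:
    """Estimate the token count from a character count, rounding up."""
    return -(-num_chars // CHARS_PER_TOKEN)


def models_that_fit(num_chars: int, reserve_for_output: int = 0) -> list[str]:
    """List models whose window holds the input plus a reserved output budget."""
    needed = estimate_tokens(num_chars) + reserve_for_output
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


# Hypothetical codebase: 1.2 million characters, keeping 20K tokens for the reply.
print(models_that_fit(1_200_000, reserve_for_output=20_000))
```

For a real decision, count tokens with the provider's own tokenizer rather than a character heuristic.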

DeepSeek V3.2 & R1

DeepSeek R1 shocked the AI community in 2025 by demonstrating competitive performance against leading frontier models at a fraction of the cost. The latest DeepSeek-V3.2-Exp (September 2025) introduces innovative sparse attention mechanisms while maintaining strong coding capabilities.

Key capabilities:

- Mixture of Experts architecture: 671 billion parameter MoE model with 37 billion activated parameters per token

- Mathematical prowess: Exceptional at mathematically intensive algorithms with 97.3% on MATH-500 benchmark

- SWE-bench performance: 49.2% on SWE-bench Verified, slightly ahead of OpenAI's o1-1217 at 48.9%

- Advanced code generation: 96.3rd percentile ranking on Codeforces, demonstrating strong algorithmic reasoning

- Cost efficiency: Approximately 15-50% of the cost of models like OpenAI's o1 (DeepSeek V3.2 API costs just $0.28 per million input tokens)

- Open-source flexibility: MIT license allowing commercial use and modifications

- Distilled variants: Smaller distilled models based on Llama and Qwen for specific use cases

- Specialized reasoning: DeepSeek R1 focuses on advanced reasoning while DeepSeek V3 is better for general coding tasks

- Transparent thinking process: Reasoning steps are visible to users, allowing for better understanding and debugging

While DeepSeek can perform some advanced coding tasks, many senior engineers report using it as a secondary model, often leveraging its cost advantages for specific types of problems like mathematical optimization and algorithmic development.

GLM-4.6

GLM-4.6 is the latest flagship model from Zhipu AI (Z.ai), a Chinese AI company making significant waves in the global LLM landscape. It's positioned as a more affordable alternative to Claude and GPT models while delivering competitive performance, particularly in coding and agentic tasks.

Key capabilities:

- Strong SWE-bench performance: Achieves 68.0% on SWE-bench Verified, ahead of its predecessor GLM-4.5 (64.2%) and near Claude Sonnet 4 (67.8%), though behind Claude Sonnet 4.5 (77.2%)

- LiveCodeBench leadership: Scores 82.8% on LiveCodeBench v6, approaching Claude Sonnet 4's 84.5% and well ahead of GLM-4.5's 63.3%

- Advanced reasoning: Scores 93.9% on AIME 25 math reasoning (98.6% with tool use), competitive with Claude Sonnet 4 (87.0%)

- Agentic coding excellence: Near parity with Claude Sonnet 4 in real-world multi-turn development tasks (48.6% win rate in CC-Bench-V1.1)

- Expanded context window: 200K tokens, up from 128K in GLM-4.5, enabling handling of more complex agentic tasks

- Front-end polish: Developers report noticeably better front-end output, with the kind of visual polish that would otherwise require manual cleanup

- Tool integration: Successfully integrates with Claude Code, Roo Code, Cline, and Kilo Code

- BrowseComp performance: Scores 45.1%, nearly doubling GLM-4.5's 26.4% on web browsing tasks

- Refined writing: Better alignment with human preferences in style and readability

- Exceptional value: GLM Coding Plan offers "Claude-level performance at 1/7th the price with 3x the usage quota"

- Open-source availability: Being released on Hugging Face and ModelScope under MIT license

GLM-4.6 is particularly strong at chaining search, retrieval, or computation tools during inference without wasting context. This makes it excellent for agent frameworks where maintaining state across dozens of steps is critical.

While impressive, Claude Sonnet 4.5 still leads in raw coding power for the most complex debugging and code reasoning tasks. GLM-4.6 is best positioned as a cost-effective alternative for some development work rather than the absolute cutting edge.

Multi-model approach to coding with LLMs

Many developers use multiple models through platforms like Cursor or terminal-based tools like Codex CLI – leveraging different models' strengths for specific coding tasks rather than relying on a single LLM for every task.

- Code completion and FIM: Claude Sonnet 4.5 provides exceptional code completion with advanced pattern recognition, adapting precisely to existing code styles. GPT-5 is also frequently used for fast, high-quality completion.

- Architecture and design: Claude Sonnet 4.5 excels at system design, planning large refactors, and maintaining consistency across complex codebases

- Algorithm development: DeepSeek R1 and GLM-4.6 are particularly strong for mathematically intensive algorithms and optimization problems

- Security auditing: GPT-5 and Claude models demonstrate superior capabilities in identifying security vulnerabilities

- Documentation generation: Gemini 2.5 Pro with its thought summarization features creates exceptionally clear and comprehensive documentation

- Front-end development: GPT-5 and Claude Sonnet 4.5 both excel at creating beautiful, responsive UIs with aesthetic sensibility

- Multi-language projects: Models with strong cross-language capabilities like GPT-5 and Gemini 2.5 Pro help with projects spanning multiple programming languages

- Educational settings: More affordable options like GPT-5 mini, GLM-4.6, or DeepSeek's distilled models provide excellent learning tools

- Long-running agentic tasks: Claude Sonnet 4.5 stands out for its ability to work autonomously for 30+ hours, while Gemini 2.5 Deep Think excels at iterative development requiring extended reasoning
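The task-to-model pairings above can be sketched as a small routing table. This is a minimal illustration, not how Cursor or any other tool actually selects models: the task labels, the `pick_model` helper, and the default model are all hypothetical.

```python
# Task-to-model preferences taken from the list above; the first entry is tried first.
ROUTES = {
    "completion": ["Claude Sonnet 4.5", "GPT-5"],
    "architecture": ["Claude Sonnet 4.5"],
    "algorithms": ["DeepSeek R1", "GLM-4.6"],
    "documentation": ["Gemini 2.5 Pro"],
    "frontend": ["GPT-5", "Claude Sonnet 4.5"],
}

# Assumption: a general-purpose fallback for tasks not in the table.
DEFAULT_MODEL = "Claude Sonnet 4.5"


def pick_model(task: str, available: set[str]) -> str:
    """Return the first preferred model for a task that the team has access to."""
    if not available:
        raise ValueError("no models available")
    for model in ROUTES.get(task, []):
        if model in available:
            return model
    # Fall back to the default, or to any available model if the default is missing.
    return DEFAULT_MODEL if DEFAULT_MODEL in available else sorted(available)[0]


print(pick_model("algorithms", {"GLM-4.6", "GPT-5"}))
```

Keeping the preferences in data rather than code makes it easy to reorder them as new models ship.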

Aside from developers' preferences, you can get an idea of how good an LLM is at coding with industry benchmarks.

Coding LLM benchmarks

These are the leading benchmarks for evaluating LLMs in software development – from Python-specific tasks to real-world software engineering tasks.

- SWE-bench - The industry standard for agentic code evaluation, measuring how models perform on real-world software engineering tasks pulled from actual GitHub issues.

- Terminal-Bench - Tests models' abilities to operate in a sandboxed Linux terminal environment, measuring practical command-line work and tool usage.

- LiveCodeBench - A holistic and contamination-free evaluation benchmark that continuously collects new competition-level coding problems, focusing on broader code-related capabilities.

- Aider Polyglot - Tests models' abilities to edit source files to complete coding exercises across multiple programming languages using diffs.

- HumanEval - Developed by OpenAI, this benchmark evaluates how effectively LLMs generate code through 164 Python programming problems with unit tests.

- BigCodeBench - The next generation of HumanEval, focusing on more complex function-level code generation with practical challenging coding tasks.

- WebDev Arena - Measures real-world web development performance with head-to-head comparisons on frontend and full-stack tasks.

- DevQualityEval - Focuses on assessing models' ability to generate quality code across multiple languages including Java, Go, and Ruby.

- Chatbot Arena - Provides head-to-head comparisons of various models on coding and general tasks based on human preferences.

- MBPP - Mostly Basic Python Problems benchmark assesses code generation through more than 900 coding tasks.

These benchmarks help developers compare key metrics such as debugging accuracy, code completion capabilities, and performance on complex programming challenges.
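Benchmarks built on unit tests, such as HumanEval and MBPP, come down to a simple idea: run the generated code and check whether the tests pass. The sketch below illustrates that idea under stated assumptions. It is not the official harness of any benchmark, the `add` problem and its two completions are made up, and real harnesses run untrusted code in a sandbox with timeouts rather than calling `exec` directly.

```python
def passes_tests(candidate_source: str, test_source: str) -> bool:
    """Run generated code, then its unit tests, in a fresh namespace."""
    namespace: dict = {}
    try:
        exec(candidate_source, namespace)  # define the generated function
        exec(test_source, namespace)       # assertions raise on failure
    except Exception:
        return False
    return True


# Hypothetical problem with two model completions for the same prompt.
TESTS = "assert add(2, 3) == 5\nassert add(-1, 1) == 0"
completions = [
    "def add(a, b):\n    return a + b",  # correct
    "def add(a, b):\n    return a - b",  # wrong: fails the first assertion
]

results = [passes_tests(source, TESTS) for source in completions]
pass_rate = sum(results) / len(results)
print(results, pass_rate)
```

A benchmark score is this pass rate computed across all problems in the suite, which is why results are reported as percentages.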

Which LLM should my team use for coding?

Different models excel in different areas:

- Production development at scale: Claude Sonnet 4.5

- Front-end & beautiful UIs: GPT-5

- Cost-conscious development: GLM-4.6 or GPT-5 mini

- Multimodal & full-stack: Gemini 2.5 Pro

- Mathematical optimization: DeepSeek R1

Most experienced developers take a multi-model approach – leveraging specialized strengths for specific tasks through platforms like Cursor and Copilot.

The ideal LLM depends on your specific needs:

- Code completion and maintenance

- Architecture design

- Algorithm development

- Documentation generation

- Code refactoring

Ultimately, experiment with several options to find the combination that best enhances your development workflow and delivers the quality results your projects demand.

Need help choosing which coding LLMs to use at your company?

We can help you decide which coding LLMs are best for your teams, tech stack, and goals. Our senior engineering teams already use AI coding tools to build production-ready software.

And if you need to hire an AI-augmented team of senior software engineers to speed up delivery, we can assemble them for you in 4-6 weeks. It’ll be faster to get started, more cost-efficient than internal hiring, and we’ll deliver high-quality results quickly.

Zappos, Twilio, and Veho are just a few companies that trust us to build their software and enterprise systems.

You can schedule a time to talk with us here. No hassle, no expectations, just answers.

Cole

Cole is Codingscape's Content Marketing Strategist & Copywriter.